Abstract

The antibiotic pipeline continues to diminish, and most of the public remains unaware of this critical situation. The decline in antibiotic development is multifactorial, and most ICUs are currently confronted with the challenge of multidrug-resistant organisms. Antimicrobial multidrug resistance is expanding all over the world, with extreme drug resistance and pandrug resistance being increasingly encountered, especially in healthcare-associated infections in large, highly specialized hospitals. Antibiotic stewardship for critically ill patients has translated into the implementation of specific guidelines, largely promoted by the Surviving Sepsis Campaign, aimed at education to optimize the choice, dosage, and duration of antibiotics in order to improve outcomes and reduce the development of resistance. Inappropriate antimicrobial therapy, meaning the selection of an antibiotic to which the causative pathogen is resistant, is a consistent predictor of poor outcomes in septic patients. Therefore, pharmacokinetically/pharmacodynamically optimized dosing regimens should be given to all patients empirically and, once the pathogen and its susceptibility are known, local stewardship practices may be employed on the basis of clinical response to redefine an appropriate regimen for the patient. This review focuses on the most severely ill patients, in whom substantial progress in organ support and in diagnostic and therapeutic strategies has markedly increased the risk of nosocomial infections.


Introduction

The report of successful treatment of life-threatening infections in the early 1940s opened the “antibiotic era”. Stimulated by widespread use during the Second World War and by an impressive industrial effort to develop and produce antibiotics, this major advance in the history of medicine is now compromised by the worldwide spread of antibiotic resistance, which has largely escaped from hospitals and threatens to project humanity into a post-antibiotic era [1].

Looking back at this incredible 75-year-long saga, we should emphasize that antibiotic resistance was described in parallel with the first antibiotic use and that a direct link between exposure and resistance was recognized in the late 1940s [2].

This review will focus on the most severely ill patients, in whom significant progress in organ support and in diagnostic and therapeutic strategies has markedly increased the risk of hospital-acquired infections (HAI); currently, most ICUs are confronted with the challenge of multidrug-resistant organisms (MDR) [3].

Epidemiology of highly resistant bacteria worldwide with a focus on Europe

Antimicrobial multidrug resistance (MDR) is now prevalent all over the world [4, 5], with extreme drug resistance (XDR) and pandrug resistance (PDR) [6] being encountered increasingly often, especially among HAI occurring in large, highly specialized hospitals. The emergence of antimicrobial resistance is largely attributed to the indiscriminate and abusive use of antimicrobials in society, particularly in the healthcare setting, and to the increasing spread of resistance genes between bacteria and of resistant bacteria between people and environments. Even in areas hitherto known for having minor resistance problems, 5–10 % of hospitalized patients on a given day harbored extended-spectrum β-lactamase (ESBL)-producing Enterobacteriaceae in their gut flora, as shown in a recent French ICU study [7].

The number of resistance mechanisms by which microorganisms can become resistant to an agent is increasing, as are the variety of resistance genes, the number of clones within a species that carry resistance, and the number of species harboring resistance genes. All this leads to an accelerating development of resistance, further enhanced by the fact that the more resistance genes there are, the higher the probability that one or more of them will end up in a so-called successful clone, with exceptional abilities to spread, infect, and cause disease [8]. When this happens, the world faces major “outbreaks (epidemics) of antimicrobial resistance”. Sometimes these are local and occur as outbreaks of Staphylococcus aureus, Escherichia coli, Klebsiella pneumoniae, Acinetobacter baumannii, Pseudomonas aeruginosa, or Enterococcus faecalis or E. faecium (the latter species being very difficult to treat when glycopeptide resistant) in highly specialized healthcare settings such as neonatal and other ICUs, hematological wards, and transplant units. With the aforementioned bacteria, major problems emerged in the USA, where outbreaks occurred in transplant units on the east coast, and in Israel, Greece, and later Italy. Today, smaller or larger outbreaks of multidrug-resistant E. coli and K. pneumoniae are seen all over the world. Recently, several of these clones have also shown resistance to last-resort agents such as colistin, making the situation desperate in some areas, in some hospitals, and for some patients. Since there is no influx of truly new antimicrobial agents and only limited evidence that antimicrobial resistance is reversible [9], the future looks grim. Another sign of our desperation is the increasing interest in redeveloping old antimicrobials [10].

Microbiological issues: breakpoints, epidemiological cut-offs, and other susceptibility and identification problems

Methods in clinical microbiology for species identification and antimicrobial susceptibility testing (AST) have recently improved dramatically. Consequently, the clinical microbiology laboratory can today drastically shorten its turnaround time (the time the laboratory needs to receive and process a sample and to make a report available).

Matrix-assisted laser desorption/ionization time-of-flight (MALDI-TOF) mass spectrometry has reduced the time required for species identification of both bacteria and fungi from 1 day, and sometimes several days, to 1 h. Blood cultures most often become positive within 8–20 h from the start of incubation [11]. With the novel techniques, species identification is feasible directly on the positive blood culture bottle [12], within 1 h of a positive blood culture signal. The time lost transporting blood culture bottles therefore becomes important. Ideally, bottles should be under incubation within 1 h of inoculation; a delay exceeding 4 h constitutes malpractice.

AST can be performed using traditional phenotypic methods. These are based on the minimum inhibitory concentration (MIC) of the antibiotic and on the application of breakpoints to categorize isolates as susceptible (S), intermediate (I), or resistant (R). AST can also be performed using genotypic methods, most commonly direct detection by polymerase chain reaction (PCR) of specific resistance genes, such as the mecA gene encoding methicillin resistance in staphylococci or the vanA gene encoding glycopeptide resistance in enterococci and staphylococci. The research community is currently exploring the use of whole genome sequencing for AST. The advantage of phenotypic methods is that they are quantitative and can predict both susceptibility and resistance. Phenotypic methods, both MIC determination and disk diffusion, traditionally require 18 h of incubation, the classical “overnight” incubation. However, if traditional systems are recalibrated, the incubation time can be brought down to 6–12 h depending on the microorganism and the resistance mechanism. The advantage of genotypic methods is that they are rapid and specific, but so far they predict only resistance and are not quantitative.

There is now international agreement on a standard method for determining the MIC, but there is still more than one system of breakpoints. For many years Europe had seven systems in use, including the Clinical and Laboratory Standards Institute (CLSI) system from the USA. Between 2002 and 2010, the European Committee on Antimicrobial Susceptibility Testing (EUCAST) succeeded in uniting the six European national systems and harmonizing breakpoints across Europe. Since then, many non-European countries have joined EUCAST. Both EUCAST [13] and CLSI [14] breakpoints are available for all internationally used susceptibility testing methods. EUCAST recommendations are freely available on the Internet [15], whereas the CLSI recommendations must be purchased. As a general rule, EUCAST clinical breakpoints are somewhat lower than CLSI breakpoints, mainly because EUCAST breakpoints were systematically revised on the basis of recent information, whereas many CLSI breakpoints had been neither reviewed nor revised for 15–25 years or more.
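To illustrate how breakpoints turn an MIC into a category, and why lower breakpoints shift isolates toward I and R, the Python sketch below applies two breakpoint conventions to the same doubling-dilution MIC series. It assumes the usual forms S ≤ S-breakpoint with R > R-breakpoint (EUCAST style) and S ≤ S-breakpoint with R ≥ R-breakpoint (CLSI style); the breakpoint values themselves are hypothetical and do not correspond to any real organism-drug pair.

```python
from typing import NamedTuple


class Breakpoints(NamedTuple):
    s_le: float         # MIC <= s_le  -> susceptible (S)
    r_cut: float        # resistance cut-off, interpreted via r_inclusive
    r_inclusive: bool   # True: R if MIC >= r_cut; False: R if MIC > r_cut


def categorize(mic: float, bp: Breakpoints) -> str:
    """Map a measured MIC (mg/L) to 'S', 'I' or 'R' using the given breakpoints."""
    if mic <= bp.s_le:
        return "S"
    is_resistant = mic >= bp.r_cut if bp.r_inclusive else mic > bp.r_cut
    return "R" if is_resistant else "I"


# Hypothetical breakpoints for one organism-drug pair (NOT real values).
EUCAST_STYLE = Breakpoints(s_le=2, r_cut=8, r_inclusive=False)   # S <= 2, R > 8
CLSI_STYLE = Breakpoints(s_le=8, r_cut=32, r_inclusive=True)     # S <= 8, R >= 32

for mic in [0.5, 1, 2, 4, 8, 16, 32]:  # doubling-dilution MICs in mg/L
    print(f"MIC {mic:>4} mg/L  EUCAST-style: {categorize(mic, EUCAST_STYLE)}"
          f"  CLSI-style: {categorize(mic, CLSI_STYLE)}")
```

With these made-up values, an MIC of 4 mg/L is reported as S under the higher (CLSI-style) breakpoints but as I under the lower (EUCAST-style) ones.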

Antibiotic stewardship

Antibiotic stewardship programs for critically ill patients, aimed at education to support the optimal choice, dosage, and duration of antibiotics in order to improve outcomes and reduce the development of resistance, have translated into the implementation of specific guidelines, largely promoted by the Surviving Sepsis Campaign [16, 17]. Very early and adequate antibiotic treatment significantly improved the outcome of critically ill patients with severe infections [18].

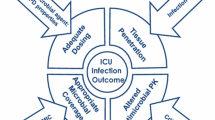

However, the very early start of antibiotics reduced the proportion of microbiologically documented infections, and microbiological documentation is mandatory for successful subsequent de-escalation [19]. Adequate coverage of potentially resistant microorganisms results in a vicious circle characterized by a progressive enlargement of the spectrum to be covered (Fig. 1). The concept of antibiotic stewardship then progressively evolved toward individualized prescribing through new concepts such as avoiding unnecessary administration of broad-spectrum antibiotics and systematic de-escalation [20].

Fig. 1 Vicious circle starting from the implementation of early and adequate empirical antibiotic treatment. Adequate coverage of potentially resistant microorganisms results in a vicious circle characterized by the need to enlarge the spectrum to be covered, with a further continuous increase in the proportion of resistant microorganisms, resulting in a progressive increase in inadequate empirical treatments and in deaths from bloodstream infections (BSI), ventilator-associated pneumonia (VAP), and surgical site infections (SSI)

Antibiotic stewardship has further been shown to have a positive impact in critical care settings. A systematic review showed that, despite the relatively low methodological quality of the 24 studies published from 1996 to 2010, which included only three randomized prospective studies and three interrupted time series, antibiotic stewardship was in general beneficial [21]. The strategy reduced antimicrobial use by 11 to 38 % of defined daily doses, lowered antimicrobial costs by US$5–10 per patient-day, and was associated with a shorter average duration of treatment, less inappropriate use, and fewer adverse events. Interventions lasting more than 6 months reduced the rate of antimicrobial resistance. Importantly, antibiotic stewardship was not associated with increases in nosocomial infection rates, length of stay, or mortality.
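Reductions in antibiotic use such as those quoted above are usually expressed in defined daily doses (DDD) per 1000 patient-days. A minimal Python sketch of that calculation follows; the drug names, the DDD values (in grams), and the consumption figures are all hypothetical, and real DDD values would be taken from the WHO ATC/DDD index.

```python
from typing import Dict


def ddd_per_1000_patient_days(grams_used: Dict[str, float],
                              ddd_grams: Dict[str, float],
                              patient_days: float) -> float:
    """Antibiotic consumption as defined daily doses (DDD) per 1000 patient-days."""
    total_ddd = sum(grams / ddd_grams[drug] for drug, grams in grams_used.items())
    return 1000.0 * total_ddd / patient_days


def percent_reduction(before: float, after: float) -> float:
    """Relative reduction (%) from a baseline value."""
    return 100.0 * (before - after) / before


# Hypothetical DDD values and ICU consumption data (not real WHO figures).
DDD_G = {"drug_A": 2.0, "drug_B": 3.0}
before = ddd_per_1000_patient_days({"drug_A": 4000, "drug_B": 6000}, DDD_G, 2500)
after = ddd_per_1000_patient_days({"drug_A": 3000, "drug_B": 4500}, DDD_G, 2500)
print(f"{before:.0f} -> {after:.0f} DDD/1000 patient-days "
      f"({percent_reduction(before, after):.0f} % reduction)")
```

With these invented figures consumption falls from 1600 to 1200 DDD per 1000 patient-days, a 25 % reduction.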

Moreover, in the context of high endemicity for methicillin-resistant S. aureus (MRSA), antibiotic stewardship combined with improved infection control measures achieved a sustainable reduction in the rate of hospital-acquired MRSA bacteremia [22].

Accordingly, as strongly recommended by ICU experts and supported by several national and international initiatives, antibiotic stewardship programs should be developed and implemented in every ICU or institution in charge of critically ill patients (Table 1).

Treatment strategies

Adequate, prompt therapy and duration

Inappropriate antimicrobial therapy, meaning the selection of an antibiotic to which the causative pathogen is resistant, is a consistent predictor of poor outcomes in septic patients [23]. On the other hand, several studies have shown that prompt appropriate antimicrobial treatment is a life-saving approach in the management of severe sepsis [24–26]. The concept of “adequate antimicrobial therapy” was defined as an extension of “appropriate antimicrobial therapy”, meaning appropriate and early therapy at optimized doses and dose intervals.

The most striking data probably come from Kumar et al. [27], who showed that inadequate initial antimicrobial therapy for septic shock was associated with a fivefold reduction in survival (52.0 vs. 10.3 %); inadequate therapy remained highly associated with the risk of death even after adjustment for other potential risk factors (odds ratio [OR] 8.99). Differences in survival were seen in all major epidemiologic, clinical, and organism subgroups, ranging from 2.3-fold for pneumococcal infection to 17.6-fold for primary bacteremia. This means that, in severe infections, antimicrobial therapy must almost always be started empirically, before isolation and identification of the causative organism and determination of its susceptibility, to achieve timely initiation.

Unfortunately, the rising incidence of MDR microorganisms leads to at least one of two consequences: an increased incidence of inappropriate antimicrobial therapy or a higher consumption of broad-spectrum antibiotics [28]. The way out of this vicious spiral is to consider the best antibiotic to be the one offering the best possible combination of high effectiveness (infection cure and avoidance of relapse) on the one hand and low collateral damage on the other.

In a recent multicenter study of hospital-acquired bacteremia [25], the incidence of MDR and XDR microorganisms was 48 and 21 %, respectively; in the multivariable model, MDR isolation and time to adequate treatment were independent predictors of 28-day mortality. The same trend is seen in the community, with a rising prevalence of MDR bacteria, for instance among patients with community-acquired pneumonia admitted to the ICU (7.6 % in Spain and 3.3 % in the UK) [29]. There is thus evidence that infection by MDR bacteria often delays appropriate antibiotic therapy, resulting in increased patient morbidity and mortality as well as prolonged hospital stay [30]. Conversely, there is also evidence that appropriate initial antibiotic therapy, early ICU admission, and maximized microbiological documentation are modifiable process-of-care factors that contribute to improved outcomes [31].

To help reduce the duration of antibiotic treatment, the most promising parameter appears to be the plasma procalcitonin (PCT) level. Besides PCT, no other sepsis biomarker has achieved universal use across different healthcare settings in the last decade. High PCT concentrations are typically found in bacterial infection, in contrast to lower levels in viral infection and levels below 0.1 ng/mL in patients without infection. Furthermore, serum PCT concentrations correlate positively with the severity of infection. Accordingly, adequate antibiotic treatment leads to a decrease in serum PCT concentrations, which can help clinicians decide when to stop therapy. A recent meta-analysis showed a significant reduction in the length of antibiotic therapy in favor of a PCT-guided strategy [32].
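How a PCT-guided stopping rule operates on serial measurements can be sketched as follows. The Python example assumes a rule of the form used in several published PCT algorithms, flagging possible discontinuation once PCT falls below an absolute threshold or by a given fraction from its peak; the 0.5 ng/mL and 80 % values are assumptions for illustration, not recommendations from this review, and both function names are hypothetical.

```python
from typing import List, Optional


def suggests_stopping(pct_ng_ml: List[float], abs_cutoff: float = 0.5,
                      rel_drop: float = 0.80) -> bool:
    """True if the latest PCT is below abs_cutoff (ng/mL) or has fallen by at
    least rel_drop (a fraction) from the highest value measured so far."""
    if not pct_ng_ml:
        raise ValueError("at least one PCT measurement is required")
    peak, latest = max(pct_ng_ml), pct_ng_ml[-1]
    dropped_enough = peak > 0 and (peak - latest) / peak >= rel_drop
    return latest < abs_cutoff or dropped_enough


def first_stop_day(daily_pct: List[float]) -> Optional[int]:
    """1-based day on which the assumed rule is first met, or None if never met."""
    for day in range(1, len(daily_pct) + 1):
        if suggests_stopping(daily_pct[:day]):
            return day
    return None


# Hypothetical daily PCT values (ng/mL) for one patient.
course = [12.0, 9.5, 5.1, 2.2, 1.1]
print(first_stop_day(course))
```

In this invented course the rule is first met on day 4, when PCT has fallen by more than 80 % from its peak even though it is still above 0.5 ng/mL.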

Monotherapy versus combination therapy

Basically, there are two reasons to favor antibiotic combination therapy over monotherapy. First, combined antibiotics may act synergistically, maximizing clinical efficacy or preventing the development of resistance. However, although synergistic effects have been proven in vitro for many antibiotic combinations, a clear in vivo benefit has only been demonstrated in the treatment of invasive pneumococcal disease and of toxic shock syndrome. In these two clinical situations, the combination of β-lactams with macrolides or lincosamides, respectively, was able to inhibit bacterial pathogenicity factors and was associated with improved survival. The second reason for selecting combination therapy is the desired extension of the expected spectrum of pathogens (so-called gap closing). However, none of the randomized controlled trials (RCTs) in patients with sepsis has demonstrated any advantage of combination therapy. A meta-analysis of RCTs comparing a β-lactam plus an aminoglycoside with β-lactam monotherapy did not show any benefit of the combination in terms of morbidity and mortality [33]. Similarly, a subgroup analysis of septic patients in a Canadian ventilator-associated pneumonia (VAP) study comparing meropenem monotherapy with meropenem/ciprofloxacin combination therapy found no differences in morbidity, mortality, or adverse reactions between the groups [34]. Finally, the MAXSEPT study of the German Study Group Competence Network Sepsis (SepNet), comparing meropenem with meropenem/moxifloxacin in severe sepsis and septic shock, demonstrated no difference between the groups in either changes in SOFA scores or 30-day mortality [35].

Results in favor of combination therapy were found in cohort studies only, in which benefits of combination therapy have been shown in terms of lower mortality, especially in patients with septic shock [36, 37]. The drawback of these studies was that various β-lactams and various combination partners were chosen.

An important difference between these observational studies and the RCTs should be mentioned: the former were conducted in countries with significantly higher rates of MDR pathogens than the latter. In both the Canadian and the MAXSEPT studies mentioned above, resistant pathogens were found in less than 10 % of cases. This suggests that combination therapy may be rational when, given the anticipated resistance pattern, failure of β-lactam monotherapy is likely. Several observational studies of carbapenem-resistant (CR) pathogens demonstrated a survival benefit for combination therapy with a carbapenem plus colistin and/or tigecycline compared with meropenem alone [38, 39]. In addition, intravenous fosfomycin has recently been administered as part of combination regimens in patients with XDR K. pneumoniae infections to improve effectiveness and decrease the rate of emergence of resistance [40]. Aminoglycosides have also recently been used in combination regimens in patients with difficult-to-treat infections, including XDR K. pneumoniae infections [41].

In conclusion, combination therapy should be recommended only in patients with severe sepsis and when an infection with a resistant organism is likely. De-escalation to an effective monotherapy should be considered when an antibiogram is available.

De-escalation

De-escalation of antimicrobial therapy is often advocated as an integral part of antibiotic stewardship programs [16, 17] and has been defined as reducing the number of antibiotics used to treat an infection as well as narrowing the spectrum of the antimicrobial agent. Essentially, this strategy applies after broad-spectrum empirical therapy has been started before identification of the causative pathogen. Intuitively, this would limit the applicability of de-escalation in MDR infections, because identification of an MDR pathogen often requires the opposite, escalation of antimicrobial therapy. Depending on the pathogen involved, multiple antibiotics may even be required, sometimes within the same antibiotic class, such as combination therapy with ertapenem and another carbapenem for K. pneumoniae carbapenemase (KPC) producers.

Not surprisingly, several clinical studies found that the presence of MDR pathogens was a reason not to de-escalate [42, 43], even when the patient was colonized with MDR organisms at sites other than the infection site. Other studies have reported de-escalation to be safe in MDR-colonized patients [44]. This does not, however, mean that the concept of de-escalation is inapplicable in MDR infections. Depending on antibiotic susceptibility, an antimicrobial agent with a narrower spectrum may be available, or combination therapy may be stopped after initial therapy. If the infection is resolving, de-escalation may prove a valid option to avoid further antibiotic pressure [45]. However, the value of de-escalation in MDR infections has not been extensively explored, and its application should be considered on an individual patient basis [45]. Retrospective analyses have found de-escalation to be safe when applied in selected patients [23], but in a recent non-blinded RCT [19], de-escalated patients had more superinfections and increased antibiotic use. Although intuitively logical and frequently suggested [16, 46, 47], de-escalation of antibiotic therapy has not been clearly associated with lower rates of antibiotic resistance development.

PK/PD optimization

It is known that antimicrobial resistance, as defined in the clinical laboratory, often translates into insufficient in vivo exposures that result in poor clinical and economic outcomes [48]. In addition to resistance, it is increasingly recognized that the host’s response to infection may in and of itself considerably reduce antimicrobial exposures through alterations in the cardiovascular, renal, hepatic, and pulmonary systems [49]. A recent ICU study highlighted the potential impact of renal adaptations: more than 65 % of critically ill patients manifested augmented renal function, defined as a creatinine clearance ≥130 mL/min/1.73 m², during their first week of hospitalization. This finding is particularly concerning because the backbones of most antimicrobial regimens in the ICU, such as penicillins, cephalosporins, and carbapenems, are predominantly cleared by the kidneys [50]. In this context, Roberts and colleagues recently reported the results of a prospective, multinational pharmacokinetic study of β-lactam exposures in the critically ill population [51]. Among 248 infected patients, 16 % did not achieve adequate antimicrobial exposures, and these patients were 32 % less likely to have a satisfactory infection outcome. Because drug assays are not routinely available for β-lactams, personalized dosing based on a given patient’s specific pharmacokinetic profile is not yet standard practice for these agents, as it is for the aminoglycosides and vancomycin; nevertheless, the pharmacokinetic and pharmacodynamic (PK/PD) profile of β-lactams and other agents may be incorporated into treatment algorithms to optimize outcomes.
To this end, we developed and implemented pharmacodynamically optimized β-lactam regimens which incorporated the use of higher doses as well as prolonged or continuous infusion administration technique to enhance in vivo exposures in patients with VAP [52]. Utilization of these regimens was shown to improve the clinical, microbiologic and economic outcomes associated with this ICU based infection. Clinicians need to recognize that in the early stages of infection, alterations in metabolic pathways as well as the reduced susceptibility of target pathogens may result in inadequate antimicrobial exposures if conventional dosing regimens are utilized. Therefore, pharmacodynamically optimized dosing regimens should be given to all patients empirically and once the pathogen, susceptibility and clinical response are known, local stewardship practices may be employed to redefine an appropriate regimen for the patient [53].
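To make the PK/PD argument concrete, the Python sketch below uses a one-compartment model with first-order elimination and repeated constant-rate infusions to estimate the fraction of the dosing interval during which concentrations exceed the MIC (fT>MIC, the index usually associated with β-lactam efficacy). All parameters are hypothetical and not drug-specific: 1 g every 8 h, a volume of distribution of 20 L, clearance of 10 L/h versus 20 L/h as a stand-in for augmented renal clearance, an MIC of 2 mg/L, and no protein binding. The model is a simplification and not a description of the regimens used in [52].

```python
import math


def conc_single_infusion(t_h, dose_mg, t_inf_h, cl_l_h, vd_l):
    """Concentration (mg/L) at time t_h after the START of one constant-rate
    infusion (one-compartment model, first-order elimination)."""
    if t_h <= 0:
        return 0.0
    k = cl_l_h / vd_l                      # elimination rate constant (1/h)
    plateau = dose_mg / t_inf_h / cl_l_h   # level reached if infusion never stopped
    if t_h <= t_inf_h:
        return plateau * (1.0 - math.exp(-k * t_h))
    c_end = plateau * (1.0 - math.exp(-k * t_inf_h))
    return c_end * math.exp(-k * (t_h - t_inf_h))


def ft_above_mic(mic, dose_mg, tau_h, t_inf_h, cl_l_h, vd_l,
                 f_unbound=1.0, n_doses=20, grid=2000):
    """Fraction of the last dosing interval (approximately steady state) during
    which the free concentration exceeds the MIC, by superposition of doses."""
    start = (n_doses - 1) * tau_h
    above = 0
    for i in range(grid):
        t = start + (i + 0.5) * tau_h / grid   # midpoint of each time step
        total = sum(conc_single_infusion(t - j * tau_h, dose_mg, t_inf_h, cl_l_h, vd_l)
                    for j in range(n_doses))
        if f_unbound * total > mic:
            above += 1
    return above / grid


# Hypothetical, non-drug-specific parameters: 1 g every 8 h, Vd 20 L, MIC 2 mg/L.
DOSE_MG, TAU_H, VD_L, MIC = 1000.0, 8.0, 20.0, 2.0
for label, cl in (("usual clearance", 10.0), ("augmented clearance", 20.0)):
    for t_inf in (0.5, 3.0):
        f = ft_above_mic(MIC, DOSE_MG, TAU_H, t_inf, cl, VD_L)
        print(f"{label:<20} {t_inf:>3} h infusion: fT>MIC = {f:.0%}")
```

Under these assumptions, doubling clearance shortens fT>MIC for both infusion durations, and extending the infusion from 0.5 to 3 h lengthens it, which is the rationale for higher doses and prolonged or continuous infusion described above.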

Infection control measures

Depending on the ICU setting, one-third to half of patients may develop a nosocomial infection. Unfortunately, host susceptibility to infection can be modified only slightly, and the presence of microorganisms and the high density of care are unavoidable. Consequently, preventing the dissemination of infection relies on interrupting transmission through infection control measures [54]. The overall concept of isolation precautions combines the systematic use of standard precautions (hand hygiene, gloves, gowns, eye protection) with transmission-based precautions (contact, droplet, airborne) [55] (Table 2). Conceptually, the objective is not to isolate but to prevent transmission of microorganisms by anticipating the potential route of transmission and the measures to be applied for each act of care.

Physical contact is the main route of transmission for the majority of bacteria; however, as demonstrated in a recent study, this seems not to be true for certain bacteria, namely MRSA [56]. Contact transmission occurs via the hands of healthcare workers (HCWs), from a patient or from contaminated nearby surfaces or instruments to another patient during the process of care. Hand hygiene (hand washing and alcohol hand rub), patient washing, and surface cleaning efficiently reduce transmission [57, 58]. Transmission can also occur via droplets or airborne particles. The specific transmission-based precautions required to avoid infection are summarized in Table 2.

Isolation precautions have been widely adopted and are nowadays the cornerstone of preventive measures used to control outbreaks, to decrease the rates of resistant microorganisms (MRSA, ESBL producers), and to limit the spread of emerging infectious diseases such as respiratory viruses (influenza, SARS and other coronaviruses) or viral hemorrhagic fevers. In this context, enhanced adherence to appropriate isolation precautions can markedly decrease the dissemination of resistance and potentially the subsequent need for broad-spectrum antibiotics.

Selective decontamination of the digestive tract (SDD) has been proposed to prevent endogenous and exogenous infections and to reduce mortality in critically ill patients. Although the efficacy of SDD has been confirmed by RCTs and systematic reviews, SDD has been the subject of intense controversy based mainly on insufficient evidence of efficacy and on concerns about resistance. A recent meta-analysis detected no relation between the use of SDD and the development of antimicrobial resistance, suggesting that the perceived risk of long-term harm related to selective decontamination cannot be justified by available data. However, the conclusions of the study indicated that the effect of decontamination on ICU-level antimicrobial resistance rates is understudied [59].

Although SDD provides a short-term benefit, neither a long-term impact nor a control of emerging resistance during outbreaks or in settings with high resistance rates can be maintained using this approach.

In the era of carbapenem resistance, antimicrobials such as colistin and aminoglycosides often represent the last option in treating multidrug-resistant Gram-negative infections. In this setting, the use of colistin should be carefully considered and possibly avoided during outbreaks due to resistant Gram-negative bacilli [60].

New therapeutic approaches

The antibiotic pipeline continues to diminish, and most of the public remains unaware of this critical situation. The decline in antibiotic development is multifactorial. Drug development in general faces increasing challenges, given the high costs involved, currently estimated at US$400–800 million per approved agent. Furthermore, antibiotics have a lower relative return on investment than other drugs because they are usually used in short courses. In contrast, chronic diseases such as HIV infection and hepatitis, which require long-term and possibly lifelong treatments that suppress symptoms, represent more attractive investment opportunities for the pharmaceutical industry. Ironically, antibiotics are victims of their own success: they are less desirable to drug companies precisely because they are more successful than other drugs [61].

Numerous agencies and professional societies have highlighted the lack of new antibiotics, especially for MDR Gram-negative pathogens. Since 2004, repeated calls to reinvigorate pharmaceutical investment in antibiotic research and development have been made by the Infectious Diseases Society of America (IDSA) and several other notable organizations, including the Innovative Medicines Initiative (Europe’s largest public–private initiative), which funds COMBACTE [62, 63]. IDSA supported a program called the “10 × ’20 Initiative”, aiming to develop ten new systemic antibacterial drugs by 2020 through the discovery of new drug classes or of new molecules from existing antibiotic classes [63].

The current assessment of the pipeline (last updated August 2014) shows 45 new antibiotics in development or recently approved (Table 3): 14 are in phase 1 clinical trials, 20 in phase 2, and seven in phase 3; a new drug application has been submitted for one, and three were recently approved [64]. Five of the seven antibiotics in phase 3, as well as one drug submitted for review to the US Food and Drug Administration (FDA), have the potential to address infections caused by MDR Gram-negative pathogens, the most pressing unmet need [65]. Unfortunately, there are very limited new options for Gram-negative bacteria such as carbapenemase-producing Enterobacteriaceae, XDR A. baumannii, and P. aeruginosa. Aerosolized drug administration seems to be a promising new approach for treating MDR lung infections. Nebulized antibiotics achieve good lung concentrations and reduce the risk of toxicity compared with intravenous administration. With a new vibrating mesh nebulizer, amikacin achieved high concentrations in the lower respiratory tract: concentrations in epithelial lining fluid were ten times higher than the MIC90 of the bacteria usually responsible for nosocomial lung infections (8 μg/mL for P. aeruginosa) [66].

Pathogen-based approach

ESBL producers

Infections caused by extended-spectrum β-lactamase-producing Enterobacteriaceae (ESBL-PE) are difficult to treat because the organisms are resistant to many antibiotics [67]. Since 2010, EUCAST and CLSI have recommended the use of alternatives to carbapenems to treat these organisms. Indeed, on the basis of antimicrobial susceptibility testing, β-lactam/β-lactamase inhibitor combinations (BLBLIs) and specific fourth-generation cephalosporins (i.e., cefepime) with greater stability against β-lactamases could theoretically be used to treat ESBL-PE infections [68]. As far as we know, these “alternatives” to carbapenems have not been evaluated in critically ill patients. Outside the ICU, the data are still scarce and conflicting [69]. A post hoc analysis of patients with bloodstream infections due to ESBL-producing E. coli from six published prospective cohorts suggested that BLBLIs (including amoxicillin–clavulanic acid and piperacillin–tazobactam) were suitable alternatives to carbapenems [70]. A meta-analysis by Vardakas et al. [71] comparing carbapenems and BLBLIs for bacteremia caused by ESBL-producing organisms found no statistically significant difference in mortality between patients receiving BLBLIs or carbapenems as empirical or definitive therapy; however, the included studies were considerably heterogeneous, none was powered to detect outcome differences, and the most severely ill patients tended to receive carbapenems. Regarding cefepime in this specific situation, the available data are less consistent, but its use seems safe for infections with isolates with an MIC of 1 mg/L or less [72–74]. For critically ill patients, dosages of 2 g every 12 h or higher are probably preferable. As suggested by recent studies in this specific situation, practitioners should be aware of the risk of underdosing.

Based on the recent data, carbapenems are still preferable to alternatives as empirical therapy for critically ill patients when ESBL-PE infection is suspected. Alternatives may be used as definitive therapy once susceptibilities are known; in that case, however, high doses and prolonged (semi-continuous) administration of β-lactams should be preferred.
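The reasoning of this section can be restated as a small decision sketch in Python. It encodes only the points made above: a carbapenem empirically; a BLBLI or cefepime as possible definitive alternatives once susceptibility is confirmed, with cefepime restricted to MICs of 1 mg/L or less and doses of at least 2 g every 12 h; high doses and prolonged administration. The function, its inputs, and the option labels are hypothetical, and it is not a validated clinical tool.

```python
from typing import List, Optional


def esbl_pe_options(phase: str, blbli_susceptible: bool = False,
                    cefepime_mic_mg_l: Optional[float] = None) -> List[str]:
    """Drug classes consistent with this section's reasoning (hypothetical helper)."""
    if phase == "empirical":
        # Susceptibilities unknown: carbapenems remain preferable in critically ill patients.
        return ["carbapenem"]
    if phase != "definitive":
        raise ValueError("phase must be 'empirical' or 'definitive'")
    options = ["carbapenem"]
    if blbli_susceptible:
        options.append("BLBLI, high dose, prolonged infusion")
    if cefepime_mic_mg_l is not None and cefepime_mic_mg_l <= 1.0:
        # Cefepime only for low-MIC isolates, at >= 2 g every 12 h.
        options.append("cefepime >= 2 g q12h, prolonged infusion")
    return options


print(esbl_pe_options("empirical"))
print(esbl_pe_options("definitive", blbli_susceptible=True, cefepime_mic_mg_l=2.0))
```

Returning a list rather than a single drug reflects the text, which presents these agents as possible alternatives without ranking them.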

Carbapenem-resistant Enterobacteriaceae (CRE)

The vast majority of CRE isolates are resistant to the most clinically reliable antibiotic classes, leaving colistin, tigecycline, and gentamicin as the main therapeutic options, and several reports have documented a high risk of mortality associated with these infections [38, 75, 76]. Given the lack of data from randomized clinical trials, therapeutic approaches to CRE infections are based on accumulating clinical experience, particularly with infections caused by K. pneumoniae producing either KPC or Verona integron-mediated metallo-β-lactamase (VIM). Recent evidence supports combination treatment with two or three drugs active in vitro, which has shown significant survival advantages over monotherapy [38, 39, 77, 78]. Paradoxically, since KPC enzymes hydrolyse carbapenems, the greatest improvement seems to be obtained when the combination includes a carbapenem, with a substantial survival benefit in patients who are more severely ill and/or in septic shock [78, 79]. In vivo activity of carbapenems against CRE has been observed with MICs as high as 8–16 mg/L [38, 77, 78], probably owing to the enhanced drug exposure achieved with high-dose/prolonged-infusion carbapenem regimens. Aminoglycoside-containing combinations, particularly with gentamicin, were associated with favorable outcomes compared with other combinations and could serve as a backbone, especially in view of increasing rates of colistin resistance [38, 39, 78]. Colistin and tigecycline are the remaining agents from which to complete the combination, according to the susceptibility pattern. A recently reported clinical success rate of 55 % with fosfomycin-containing combinations in infections caused by XDR and PDR pathogens makes fosfomycin another therapeutic candidate, particularly against Enterobacteriaceae, for which susceptibility rates are promising [80]. When fosfomycin is used in critically ill patients with MDR pathogens, high doses (up to 24 g/day) and avoidance of monotherapy are strongly recommended.

The failure of colistin or tigecycline monotherapy may be explained by suboptimal drug exposure, and recent PK/PD data favor dose escalation over the initially recommended dose regimens. A small, single-center, non-comparative study using a loading dose (LD) of 9 MIU followed by 4.5 MIU bid, adapted to renal function [81, 82], suggested that colistin monotherapy might be adequate [83]. A concise guide to the optimal use of polymyxins is provided in the Electronic Supplementary Material. Higher tigecycline doses (up to 200 mg/day) may optimize its PK and result in improved clinical outcomes [84].
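Colistin doses in this review are expressed in million international units (MIU) of colistimethate sodium (CMS), whereas some product labelling uses milligrams of colistin base activity (CBA). The sketch below converts the regimen quoted above (9 MIU LD, then 4.5 MIU bid) using the commonly cited approximations 1 MIU ≈ 80 mg CMS ≈ 33 mg CBA. These factors are an assumption not taken from the text, the function names are hypothetical, and no renal adjustment is modelled.

```python
# Commonly cited approximations for colistimethate sodium (CMS); assumed, not taken from the text.
MG_CBA_PER_MIU = 33.0  # mg of colistin base activity per million IU
MG_CMS_PER_MIU = 80.0  # mg of colistimethate sodium per million IU


def miu_to_mg(miu: float) -> dict:
    """Convert a CMS dose in million IU into approximate milligram equivalents."""
    return {"mg_cba": miu * MG_CBA_PER_MIU, "mg_cms": miu * MG_CMS_PER_MIU}


def daily_total_miu(dose_miu: float, doses_per_day: int) -> float:
    """Total maintenance dose per day (renal adjustment is not modelled)."""
    return dose_miu * doses_per_day


loading = miu_to_mg(9.0)               # 9 MIU loading dose
maintenance = daily_total_miu(4.5, 2)  # 4.5 MIU twice daily
print(loading, maintenance)
```

With these factors, the 9 MIU loading dose corresponds to roughly 300 mg CBA (about 720 mg CMS), and 4.5 MIU bid again totals 9 MIU per day.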

Double carbapenem combinations, consisting of ertapenem as a substrate and doripenem or meropenem as the active compound, have recently shown success in case series and small studies, even when the pathogen had high carbapenem MICs [85]. Finally, decisions regarding the empirical antibiotic treatment of critically ill patients must be based on sound knowledge of the local distribution of pathogens and on an assessment of the risk factors for infection caused by CRE [76, 86].

Pseudomonas aeruginosa

P. aeruginosa, along with E. coli, K. pneumoniae, and A. baumannii, is a leading pathogen in the ICU setting, causing severe infections (VAP, bacteremia) in which mortality is directly related to any delay in starting appropriate antibiotic therapy [87].

In 2011, high percentages of P. aeruginosa isolates resistant to aminoglycosides, ceftazidime, fluoroquinolones, piperacillin/tazobactam, and carbapenems were reported from several countries, especially in Southern and Eastern Europe. Resistance to carbapenems was above 10 % in 19 of the 29 countries reporting to the European Centre for Disease Prevention and Control (ECDC); multidrug resistance was also common, with 15 % of isolates reported as resistant to at least three antimicrobial classes. Carbapenem-resistant P. aeruginosa now accounts for about 20 % of isolates in Italian ICUs, and a few strains (2–3 %) are also resistant to colistin [88].

Primary regimens for susceptible isolates, according to the site and severity of infection, are summarized in Table 4. An antipseudomonal β-lactam is generally preferred, administered as an extended infusion after an LD to rapidly achieve the pharmacodynamic target [89]. Although there is no clear evidence that combination therapy (i.e., a β-lactam plus an aminoglycoside or a fluoroquinolone) is superior to monotherapy [90], many clinicians adopt this regimen for serious infections (bacteremia, VAP) and in patients with severe sepsis and septic shock. When combination therapy with an aminoglycoside (amikacin or gentamicin) is preferred, we recommend a maximum duration of 5 days.
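Why an extended infusion helps reach the pharmacodynamic target can be illustrated with a one-compartment model: zero-order infusions are superimposed (linear PK), and the fraction of time with concentration above the MIC (fT>MIC, the usual PK/PD index for β-lactams) is counted. This is a hypothetical sketch, not a dosing tool: a volume of distribution of 20 L, a half-life of 1 h, 1 g every 8 h, and an MIC of 8 mg/L are illustrative values rather than data for a specific drug; protein binding is ignored; and the LD is modelled as a 30-min infusion given together with the first maintenance infusion.

```python
import math


def infusion_conc(t, start, duration, rate_mg_h, v_l, k_h):
    """Concentration (mg/L) at time t (h) from one zero-order infusion, one-compartment model."""
    if t <= start:
        return 0.0
    plateau = rate_mg_h / (v_l * k_h)  # level this infusion rate would approach if continued
    if t <= start + duration:
        return plateau * (1.0 - math.exp(-k_h * (t - start)))
    end_level = plateau * (1.0 - math.exp(-k_h * duration))
    return end_level * math.exp(-k_h * (t - start - duration))


def fraction_time_above_mic(dose_mg, infusion_h, tau_h, n_doses, mic_mg_l,
                            v_l=20.0, half_life_h=1.0, loading_dose_mg=0.0, step_h=0.001):
    """Fraction of the n_doses * tau_h hours during which total concentration exceeds the MIC."""
    k_h = math.log(2) / half_life_h
    # (start, duration, rate) for each maintenance infusion
    infusions = [(i * tau_h, infusion_h, dose_mg / infusion_h) for i in range(n_doses)]
    if loading_dose_mg > 0:
        # Hypothetical LD: 30-min infusion starting together with the first maintenance infusion.
        infusions.append((0.0, 0.5, loading_dose_mg / 0.5))
    n_steps = int(round(n_doses * tau_h / step_h))
    above = 0
    for i in range(n_steps):
        t = (i + 0.5) * step_h  # midpoint of each small time step
        # Linear PK: contributions of all infusions simply add up.
        conc = sum(infusion_conc(t, s, d, r, v_l, k_h) for s, d, r in infusions)
        if conc > mic_mg_l:
            above += 1
    return above / n_steps


short = fraction_time_above_mic(1000, 0.5, 8, 3, mic_mg_l=8)
extended = fraction_time_above_mic(1000, 3.0, 8, 3, mic_mg_l=8)
extended_ld = fraction_time_above_mic(1000, 3.0, 8, 3, mic_mg_l=8, loading_dose_mg=1000)
print(f"30-min: {short:.0%}, 3-h: {extended:.0%}, 3-h + LD: {extended_ld:.0%}")
```

Under these assumptions, the same 1 g dose keeps the concentration above the MIC for a larger share of each 8-h interval when infused over 3 h than over 30 min, and adding an LD can only raise the concentration curve, never lower it.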

For infections caused by a strain susceptible only to colistin, a high-dose colistin regimen (9 MU LD, then 4.5 MU bid) is recommended. Nebulized colistin may also be considered for VAP, and intrathecal administration is required for meningitis [91]. The benefit of adding a carbapenem to colistin when the strain is not susceptible to carbapenems is unclear, and several experts prefer a combination showing synergistic activity in vitro (e.g., colistin plus rifampin). Fosfomycin shows variable in vitro activity against MDR/XDR P. aeruginosa strains and may be administered for systemic infections (4 g every 6 h), mainly as part of a combination regimen. New drugs with activity against P. aeruginosa include ceftazidime/avibactam (avibactam being a non-β-lactam inhibitor of class A and C β-lactamases, including P. aeruginosa AmpC), the new aminoglycoside plazomicin, and the combination of the new cephalosporin ceftolozane with tazobactam, which is also active against MDR and XDR P. aeruginosa strains and has completed phase 3 trials for the treatment of complicated intra-abdominal infections (cIAIs) and complicated urinary tract infections (cUTIs) [65, 90].

Acinetobacter baumannii

A. baumannii has gained increasing attention because of its potential to cause severe infections and its ability to develop resistance to practically all available antimicrobials. Adequate empirical therapy of severe infections caused by A. baumannii is crucial for survival [92].

Empirical treatment of A. baumannii infections is often challenging, and empirical coverage might be considered in cases of severe sepsis/septic shock and in centers where the prevalence of MDR A. baumannii exceeds 25 % [93]. Carbapenems have traditionally been the drugs of choice and are still the preferred antimicrobials for Acinetobacter infections in areas with high rates of susceptibility. Sulbactam is bactericidal against A. baumannii and is a suitable alternative for isolates susceptible to this agent; unfortunately, a steady increase in resistance to sulbactam has been observed [94]. Polymyxins are now the antimicrobials with the greatest level of in vitro activity against A. baumannii [95, 96]. However, their indiscriminate use may select for further resistance and expose patients to unnecessary toxicity, so careful selection of the patients who should receive empirical coverage of Acinetobacter is essential. Colistin is the more widely used polymyxin in clinical practice, although polymyxin B seems to be associated with less renal toxicity [97]. The recommended doses of these antimicrobials are shown in Table 5. Tigecycline, which is active in vitro against a wide range of Gram-negative bacilli including A. baumannii, is approved in Europe for the treatment of complicated skin and soft tissue infections and complicated intra-abdominal infections. Although several meta-analyses have warned of an increased risk of death in patients receiving tigecycline compared with other antibiotics, particularly in HAP and VAP [98–100], a high-dose regimen (Table 5), usually in combination with another antimicrobial, may be a valid alternative for severe infections including A. baumannii pneumonia [75, 101].
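The criterion for empirical Acinetobacter coverage can be written as a one-line rule, which makes the role of the 25 % threshold explicit. This is an illustrative sketch: the function name is hypothetical, and reading "severe sepsis/septic shock" and "local MDR A. baumannii prevalence greater than 25 %" as jointly required is an interpretation assumed here, since the sentence can also be read as listing two separate situations.

```python
# Threshold quoted in the text: local MDR A. baumannii prevalence greater than 25 %.
MDR_AB_PREVALENCE_THRESHOLD = 0.25


def consider_empirical_ab_coverage(severe_sepsis_or_shock: bool, local_mdr_ab_prevalence: float) -> bool:
    """Hypothetical helper; assumes both conditions in the text must hold."""
    if not 0.0 <= local_mdr_ab_prevalence <= 1.0:
        raise ValueError("prevalence must be a fraction between 0 and 1")
    return severe_sepsis_or_shock and local_mdr_ab_prevalence > MDR_AB_PREVALENCE_THRESHOLD


print(consider_empirical_ab_coverage(True, 0.40))  # septic shock in a high-prevalence centre
print(consider_empirical_ab_coverage(True, 0.10))  # septic shock in a low-prevalence centre
```

The joint reading is the more restrictive one, which is consistent with the text's warning that indiscriminate empirical polymyxin use may select for resistance and cause unnecessary toxicity.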

Although in vitro studies have demonstrated synergy between colistin and rifampin, a recent RCT found that the colistin/rifampin combination improved microbiological eradication but not clinical outcomes [102]. Several in vitro studies have documented unexpectedly potent synergy between colistin and glycopeptides against carbapenem-resistant A. baumannii; however, the combination of colistin plus a glycopeptide is currently discouraged for A. baumannii infections [103, 104].

MRSA

The main options for treating MRSA infections in critically ill patients include glycopeptides (vancomycin and teicoplanin), linezolid, and daptomycin; daptomycin is contraindicated for the treatment of pneumonia because it is inactivated by pulmonary surfactant. Alternative anti-MRSA agents are tigecycline, which carries a regulatory warning about a possible small (unexplained) increase in mortality risk [105]; telavancin, which carries warnings contraindicating its use, notably in patients with renal failure [99]; and ceftaroline, dalbavancin, and oritavancin, for which there is limited evidence of efficacy in very severe infection. Numerous meta-analyses have compared the efficacy of these agents in MRSA infection. Four of them are unusual in that they assessed all available treatment options [106–109], although only one [107] examined all MRSA infection types rather than MRSA cSSTIs alone. This network meta-analysis identified 24 RCTs (17 for cSSTI and 10 for HAP/VAP) comparing one of six antibiotics with vancomycin. In cSSTI, linezolid and ceftaroline were non-significantly more effective than vancomycin: linezolid ORs were 1.15 (0.74–1.71) and 1.01 (0.42–2.14), and ceftaroline ORs were 1.12 (0.78–1.64) and 1.59 (0.68–3.74), in the modified intention-to-treat (MITT) and MRSA m-MITT populations, respectively. For HAP/VAP, linezolid was non-significantly better than vancomycin, with ORs of 1.05 (0.72–1.57) and 1.32 (0.71–2.48) in the MITT and MRSA m-MITT populations, respectively. Data from the ZEPHyR trial suggested that linezolid is clinically superior to vancomycin for the treatment of proven MRSA nosocomial pneumonia, with higher rates of successful clinical response and an acceptable safety and tolerability profile. Microbiological responses paralleled clinical outcomes: MRSA clearance was 30 % greater with linezolid than with vancomycin, and a difference of at least 20 % persisted until late follow-up, suggesting that linezolid treatment may result in more complete bacterial eradication [110].
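The label "non-significantly" for the network meta-analysis follows directly from the reported intervals: each one contains an odds ratio of 1, i.e., no difference from vancomycin. The short check below makes that explicit using the numbers quoted above. The text does not state the interval level (95 % is usual), and the dictionary and function names are hypothetical.

```python
# ORs versus vancomycin as quoted in the text: (lower, point estimate, upper).
REPORTED_ORS = {
    ("cSSTI", "linezolid", "MITT"): (0.74, 1.15, 1.71),
    ("cSSTI", "linezolid", "MRSA m-MITT"): (0.42, 1.01, 2.14),
    ("cSSTI", "ceftaroline", "MITT"): (0.78, 1.12, 1.64),
    ("cSSTI", "ceftaroline", "MRSA m-MITT"): (0.68, 1.59, 3.74),
    ("HAP/VAP", "linezolid", "MITT"): (0.72, 1.05, 1.57),
    ("HAP/VAP", "linezolid", "MRSA m-MITT"): (0.71, 1.32, 2.48),
}


def excludes_no_effect(lower: float, upper: float, null_value: float = 1.0) -> bool:
    """True if the interval lies entirely on one side of OR = 1."""
    return upper < null_value or lower > null_value


for (setting, drug, population), (lower, point, upper) in REPORTED_ORS.items():
    verdict = "significant" if excludes_no_effect(lower, upper) else "not significant"
    print(f"{setting:8} {drug:12} {population:12} OR {point:.2f} ({lower:.2f}-{upper:.2f}): {verdict}")
```

Every row prints "not significant", which is why point estimates above 1 are reported only as a non-significant advantage over vancomycin.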

Uncertainty about the relative efficacy of vancomycin has been fuelled by reports of worse outcomes in patients with MRSA infections caused by strains with elevated MICs. A recent meta-analysis of S. aureus bacteremia studies failed to find an overall increased risk of death when comparing cases caused by S. aureus with a high vancomycin MIC (at least 1.5 mg/L) with those caused by strains with a low vancomycin MIC (less than 1.5 mg/L) [111]. However, the authors cautioned that they could not definitively exclude an increased mortality risk, and it remains possible that specific MRSA strains/clones are associated with worse outcomes. Attempts to address elevated MICs, and so improve target attainment, by increasing vancomycin dosages are associated with more nephrotoxicity [113]. An outbreak of linezolid-resistant MRSA mediated by the cfr gene has also been reported and was associated with nosocomial transmission and extensive use of linezolid [112].

Clostridium difficile

Severe C. difficile infection (CDI) is characterized by at least one of the following: a white blood cell count greater than 15 × 10⁹/L, an acute rise in serum creatinine (i.e., an increase of more than 50 % above baseline), a temperature greater than 38.5 °C, or abdominal or radiological evidence of severe colitis. There are currently two main treatment options for severe CDI: oral vancomycin 125 mg qds for 10–14 days, or fidaxomicin, which should be considered for patients with severe CDI who are at high risk of recurrence [114], such as elderly patients with multiple comorbidities who are receiving concomitant antibiotics. Metronidazole monotherapy should be avoided in patients with severe CDI because of increasing evidence that it is inferior to these alternatives [115]. In patients with severe CDI who are not responding to oral vancomycin 125 mg qds, alternatives are oral fidaxomicin 200 mg bid or high-dose oral vancomycin (up to 500 mg qds, administered via a nasogastric tube if necessary), with or without iv metronidazole 500 mg tds. The addition of oral rifampicin (300 mg bid) or iv immunoglobulin (400 mg/kg) may also be considered, but evidence of the efficacy of these approaches is lacking. There are case reports of tigecycline being used to treat severe CDI that has failed to respond to conventional treatment, but this is an unlicensed indication [116, 117].
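The severity definition above is an "any one of four criteria" rule, which can be sketched as follows. This is purely illustrative: the record type and function names are hypothetical, creatinine is taken in whatever unit is used for both values, and the "acute" time course of the creatinine rise is not modelled.

```python
from dataclasses import dataclass


@dataclass
class CdiFindings:
    """Hypothetical record of the findings used in the severity definition."""
    wbc_10e9_per_l: float
    creatinine_baseline: float  # same unit as creatinine_current
    creatinine_current: float
    temperature_c: float
    severe_colitis_evidence: bool  # abdominal or radiological


def severe_cdi_criteria_met(f: CdiFindings) -> list:
    """Return the severity criteria that are met; any one of them suffices."""
    rise = (f.creatinine_current - f.creatinine_baseline) / f.creatinine_baseline
    checks = {
        "WBC > 15 x 10^9/L": f.wbc_10e9_per_l > 15.0,
        "creatinine > 50 % above baseline": rise > 0.50,
        "temperature > 38.5 C": f.temperature_c > 38.5,
        "evidence of severe colitis": f.severe_colitis_evidence,
    }
    return [name for name, met in checks.items() if met]


def is_severe_cdi(f: CdiFindings) -> bool:
    return bool(severe_cdi_criteria_met(f))


example = CdiFindings(wbc_10e9_per_l=12.0, creatinine_baseline=80.0, creatinine_current=130.0,
                      temperature_c=37.8, severe_colitis_evidence=False)
print(severe_cdi_criteria_met(example))
```

In the example, only the creatinine criterion is met (a rise of 62.5 % above baseline), which on its own is enough to classify the episode as severe.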

In life-threatening CDI (i.e., hypotension, partial or complete ileus, or toxic megacolon), oral vancomycin up to 500 mg qds for 10–14 days via nasogastric tube (which is then clamped for 1 h) and/or rectal instillation of vancomycin enemas, plus iv metronidazole 500 mg tds, are used [118], although the evidence base in such cases is poor. These patients require close monitoring with specialist surgical input, and their blood lactate should be measured. Colectomy should be considered if caecal dilatation exceeds 10 cm, or in case of perforation or septic shock. Colectomy is best performed before blood lactate rises above 5 mmol/L, beyond which survival is extremely poor [119]. A recent systematic review concluded that total colectomy with end ileostomy is the preferred surgical procedure, as other procedures are associated with high rates of re-operation and mortality; less extensive surgery may have a role in selected patients with earlier-stage disease [120]. An alternative approach, diverting loop ileostomy with colonic lavage, has been reported to be associated with reduced morbidity and mortality [121].
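The thresholds in this paragraph (features defining life-threatening CDI, caecal diameter above 10 cm, lactate above 5 mmol/L) can be gathered into two small helpers. This is an illustrative sketch only; the function names and the returned flag names are hypothetical, and the helpers simply restate the criteria given above.

```python
def life_threatening_cdi(hypotension: bool, ileus: bool, toxic_megacolon: bool) -> bool:
    """Any one of the features listed in the text (ileus may be partial or complete)."""
    return hypotension or ileus or toxic_megacolon


def surgical_flags(caecal_diameter_cm: float, perforation: bool, septic_shock: bool,
                   lactate_mmol_l: float) -> dict:
    """Flags derived from the thresholds quoted in the text."""
    return {
        "consider_colectomy": caecal_diameter_cm > 10.0 or perforation or septic_shock,
        # Surgery is best done before lactate exceeds 5 mmol/L, when survival is extremely poor.
        "lactate_above_5": lactate_mmol_l > 5.0,
    }


print(life_threatening_cdi(hypotension=False, ileus=True, toxic_megacolon=False))
print(surgical_flags(caecal_diameter_cm=11.0, perforation=False, septic_shock=False, lactate_mmol_l=3.2))
```

In the example, a caecal diameter of 11 cm is enough to prompt consideration of colectomy, while a lactate of 3.2 mmol/L indicates that the window the text describes has not yet closed.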

Conclusions

Current clinical practice for critically ill patients has been severely challenged by the emergence of multidrug resistance among commonly encountered pathogens. The treatment outlook seems more optimistic for Gram-positive pathogens (including C. difficile), for which the pipeline is more promising; however, the recently launched anti-MRSA agents have not been extensively investigated in critically ill populations. For Gram-negative MDR infections, there is great concern about the therapeutic future, as only a handful of the upcoming agents will address the unmet medical needs. Combinations of β-lactams with β-lactamase inhibitors seem promising against Gram-negative MDR pathogens, but their real clinical utility will be known only when the results of large clinical trials are available. Currently, the most effective approach is PK/PD optimization of the available antibiotics, particularly given the increasing awareness of the pharmacokinetic alterations that occur in critically ill patients. Combination treatments seem to be important, at least in the empirical phase, to ensure adequate coverage and improve clinical outcome; however, randomized clinical trials are urgently needed to define the possible benefit of combinations in various settings. Most importantly, infection control measures and prompt diagnostics are the cornerstones for preventing further transmission of MDR and XDR pathogens in healthcare settings and for optimizing early antimicrobial treatment.

Abbreviations

- AST: Antimicrobial susceptibility testing

- BLBLI: β-Lactam/β-lactamase inhibitors

- CDI: Clostridium difficile infection

- CRE: Carbapenem-resistant Enterobacteriaceae

- ECDC: European Centre for Disease Prevention and Control

- ESBL: Extended-spectrum β-lactamases

- ESBL-PE: Extended-spectrum β-lactamase-producing Enterobacteriaceae

- EUCAST: European Committee on Antimicrobial Susceptibility Testing

- FDA: Food and Drug Administration

- HAP: Hospital-acquired pneumonia

- HCW: Healthcare workers

- ICU: Intensive care unit

- IDSA: Infectious Diseases Society of America

- KPC: Klebsiella pneumoniae carbapenemase

- MDR: Multidrug-resistant organisms

- MIC: Minimum inhibitory concentration

- MITT: Modified intention to treat

- MRSA: Methicillin-resistant Staphylococcus aureus

- PK/PD: Pharmacokinetic/pharmacodynamic

- SDD: Selective digestive decontamination

- VAP: Ventilator-associated pneumonia

- VIM: Verona integron-mediated metallo-β-lactamase

- VRE: Vancomycin-resistant enterococci

References

Nathan C, Cars O (2014) Antibiotic resistance—problems, progress, and prospects. N Engl J Med 371:1761–1763

Barber M, Rozwadowska-Dowzenko M (1948) Infection by penicillin-resistant staphylococci. Lancet 2:641–644

Bassetti M, Nicolau DP, Calandra T (2014) What’s new in antimicrobial use and resistance in critically ill patients? Intensive Care Med 40:422–426

WHO (2014) Antimicrobial resistance: global report on surveillance. http://www.who.int/drugresistance/documents/surveillancereport/en/

ECDC (2013) The ECDC EARS-Net report 2013. http://www.ecdc.europa.eu/en/publications/Publications/antimicrobial-resistance-surveillance-europe-2013.pdf

Magiorakos AP, Srinivasan A, Carey RB, Carmeli Y, Falagas ME, Giske CG, Harbarth S, Hindler JF, Kahlmeter G, Olsson-Liljequist B, Paterson DL, Rice LB, Stelling J, Struelens MJ, Vatopoulos A, Weber JT, Monnet DL (2012) Multidrug-resistant, extensively drug-resistant and pandrug-resistant bacteria: an international expert proposal for interim standard definitions for acquired resistance. Clin Microbiol Infect 18:268–281

Razazi K, Derde LP, Verachten M, Legrand P, Lesprit P, Brun-Buisson C (2012) Clinical impact and risk factors for colonization with extended-spectrum β-lactamase-producing bacteria in the intensive care unit. Intensive Care Med 38(11):1769–1778

Woodford N (2008) Successful, multiresistant bacterial clones. J Antimicrob Chemother 61:233–234

Sundqvist M, Geli P, Andersson DI, Sjölund-Karlsson M, Runehagen A, Cars H, Abelson-Storby K, Cars O, Kahlmeter G (2010) Little evidence for reversibility of trimethoprim resistance after a drastic reduction in trimethoprim use. J Antimicrob Chemother 65(2):350–360

ESCMID Conference on Reviving Old Antibiotics (2014) 22–24 October 2014, Vienna, Austria. https://www.escmid.org/research_projects/escmid_conferences/reviving_old_antibiotics/scientific_programme/. Accessed 11 Mar 2015

Buchan BW, Ledeboer NA (2014) Emerging technologies for the clinical microbiology laboratory. Clin Microbiol Rev 27(4):783–822

La Scola B, Raoult D (2009) Direct identification of bacteria in positive blood culture bottles by matrix-assisted laser desorption time-of-flight mass spectrometry. PLoS One 4:e8041

The European Committee on Antimicrobial Susceptibility Testing (EUCAST). http://www.eucast.org. Accessed 11 Mar 2015

The Clinical Laboratory Standards Institute. http://www.clsi.org. Accessed 11 Mar 2015

Clinical breakpoints from the European Committee on Antimicrobial Susceptibility Testing. http://www.eucast.org/clinical_breakpoints/. Accessed 11 Mar 2015

Dellit TH, Owens RC, McGowan JE Jr, Gerding DN, Weinstein RA, Burke JP, Huskins WC, Paterson DL, Fishman NO, Carpenter CF, Brennan PJ, Billeter M, Hooton TM, Infectious Diseases Society of America and the Society for Healthcare Epidemiology of America (2007) Guidelines for developing an institutional program to enhance antimicrobial stewardship. Clin Infect Dis 44:159–177

Dellinger RP, Levy MM, Rhodes A, Annane D, Gerlach H, Opal SM, Sevransky JE, Sprung CL, Douglas IS, Jaeschke R, Osborn TM, Nunnally ME, Townsend SR, Reinhart K, Kleinpell RM, Angus DC, Deutschman CS, Machado FR, Rubenfeld GD, Webb S, Beale RJ, Vincent JL, Moreno R, Surviving Sepsis Campaign Guidelines Committee including The Pediatric Subgroup (2013) Surviving Sepsis Campaign: international guidelines for management of severe sepsis and septic shock. Intensive Care Med 39:165–228

Levy MM, Rhodes A, Phillips GS, Townsend SR, Schorr CA, Beale R, Osborn T, Lemeshow S, Chiche JD, Artigas A, Dellinger RP (2014) Surviving Sepsis Campaign: association between performance metrics and outcomes in a 7.5-year study. Intensive Care Med 40:1623–1633

Timsit JF, Harbarth S, Carlet J (2014) De-escalation as a potential way of reducing antibiotic use and antimicrobial resistance in ICU. Intensive Care Med 40:1580–1582

Luyt CE, Bréchot N, Trouillet JL, Chastre J (2014) Antibiotic stewardship in the intensive care unit. Crit Care 18:480

Kaki R, Elligsen M, Walker S, Simor A, Palmay L, Daneman N (2011) Impact of antimicrobial stewardship in critical care: a systematic review. J Antimicrob Chemother 66:1223–1230

Chalfine A, Kitzis MD, Bezie Y, Benali A, Perniceni L, Nguyen JC, Dumay MF, Gonot J, Rejasse G, Goldstein F, Carlet J, Misset B (2012) Ten-year decrease of acquired methicillin-resistant Staphylococcus aureus (MRSA) bacteremia at a single institution: the result of a multifaceted program combining cross-transmission prevention and antimicrobial stewardship. Antimicrob Resist Infect Control 1:18

Garnacho-Montero J, Gutierrez-Pizarraya A, Escoresca-Ortega A et al (2013) De-escalation of empirical therapy is associated with lower mortality in patients with severe sepsis and septic shock. Intensive Care Med 39:2237

Kumar A, Roberts D, Wood KE et al (2006) Duration of hypotension before initiation of effective antimicrobial therapy is the critical determinant of survival in human septic shock. Crit Care Med 34:1589–1596

Tabah A, Koulenti D, Laupland K et al (2012) Characteristics and determinants of outcome of hospital-acquired bloodstream infections in intensive care units: the EUROBACT International Cohort Study. Intensive Care Med 38:1930–1945

Ferrer R, Artigas A, Suarez D, Palencia E, Levy MM, Arenzana A, Perez XL, Sirvent JM (2009) Effectiveness of treatments for severe sepsis: a prospective, multicenter, observational study. Am J Respir Crit Care Med 180:861–866

Kumar A, Ellis P, Arabi Y, Roberts D et al (2009) Initiation of inappropriate antimicrobial therapy results in a fivefold reduction of survival in human septic shock. Chest 136:1237–1248

Zilberberg MD, Shorr AF, Micek ST, Vazquez-Guillamet C, Kollef MH (2014) Multi-drug resistance, inappropriate initial antibiotic therapy and mortality in Gram-negative severe sepsis and septic shock: a retrospective cohort study. Crit Care 18(6):596

Aliberti S, Cilloniz C, Chalmers JD et al (2013) Multidrug-resistant pathogens in hospitalised patients coming from the community with pneumonia: a European perspective. Thorax 68:997–999

Kollef MH, Sherman G, Ward S et al (1999) Inadequate antimicrobial treatment of infections: a risk factor for hospital mortality among critically ill patients. Chest 115:462–474

Gonçalves-Pereira J, Pereira JM, Ribeiro O, Baptista JP, Froes F, Paiva JA (2014) Impact of infection on admission and of process of care on mortality of patients admitted to the intensive care unit: the INFAUCI study. Clin Microbiol Infect. doi:10.1111/1469-0691.12738

Prkno A, Wacker C, Brunkhorst FM, Schlattmann P (2013) Procalcitonin-guided therapy in intensive care unit patients with severe sepsis and septic shock—a systematic review and meta-analysis. Crit Care 17(6):R291

Paul M, Benuri-Silbiger I, Soares-Weiser K, Leibovici L (2004) Beta lactam monotherapy versus beta lactam-aminoglycoside combination therapy for sepsis in immunocompetent patients: systematic review and meta-analysis of randomised trials. BMJ 328(7441):668

Heyland DK, Dodek P, Muscedere J, Day A, Cook D, Canadian Critical Care Trials Group (2008) Randomized trial of combination versus monotherapy for the empiric treatment of suspected ventilator-associated pneumonia. Crit Care Med 36(3):737–744

Brunkhorst FM, Oppert M, Marx G, Bloos F, Ludewig K, Putensen C, Nierhaus A, Jaschinski U, Meier-Hellmann A, Weyland A, Gründling M, Moerer O, Riessen R, Seibel A, Ragaller M, Büchler MW, John S, Bach F, Spies C, Reill L, Fritz H, Kiehntopf M, Kuhnt E, Bogatsch H, Engel C, Loeffler M, Kollef MH, Reinhart K, Welte T, German Study Group Competence Network Sepsis (SepNet) (2012) Effect of empirical treatment with moxifloxacin and meropenem vs meropenem on sepsis-related organ dysfunction in patients with severe sepsis: a randomized trial. JAMA 307(22):2390–2399

Micek ST, Welch EC, Khan J, Pervez M, Doherty JA, Reichley RM, Kollef MH (2010) Empiric combination antibiotic therapy is associated with improved outcome against sepsis due to Gram-negative bacteria: a retrospective analysis. Antimicrob Agents Chemother 54(5):1742–1748

Kumar A, Zarychanski R, Light B, Parrillo J, Maki D, Simon D, Laporta D, Lapinsky S, Ellis P, Mirzanejad Y, Martinka G, Keenan S, Wood G, Arabi Y, Feinstein D, Kumar A, Dodek P, Kravetsky L, Doucette S, Cooperative Antimicrobial Therapy of Septic Shock (CATSS) Database Research Group (2010) Early combination antibiotic therapy yields improved survival compared with monotherapy in septic shock: a propensity-matched analysis. Crit Care Med 38(9):1773–1785

Tumbarello M, Viale P, Viscoli C, Trecarichi EM, Tumietto F, Marchese A, Spanu T, Ambretti S, Ginocchio F, Cristini F, Losito AR, Tedeschi S, Cauda R, Bassetti M (2012) Predictors of mortality in bloodstream infections caused by Klebsiella pneumoniae carbapenemase-producing K. pneumoniae: importance of combination therapy. Clin Infect Dis 55(7):943–950

Qureshi ZA, Paterson DL, Potoski BA, Kilayko MC, Sandovsky G, Sordillo E, Polsky B, Adams-Haduch JM, Doi Y (2012) Treatment outcome of bacteremia due to KPC-producing Klebsiella pneumoniae: superiority of combination antimicrobial regimens. Antimicrob Agents Chemother 56(4):2108–2113

Samonis G, Maraki S, Karageorgopoulos DE, Vouloumanou EK, Falagas ME (2012) Synergy of fosfomycin with carbapenems, colistin, netilmicin, and tigecycline against multidrug-resistant Klebsiella pneumoniae, Escherichia coli, and Pseudomonas aeruginosa clinical isolates. Eur J Clin Microbiol Infect Dis 31:695–701

Durante-Mangoni E, Grammatikos A, Utili R, Falagas ME (2009) Do we still need the aminoglycosides? Int J Antimicrob Agents 33:201–205

De Waele JJ, Ravyts M, Depuydt P, Blot SI, Decruyenaere J, Vogelaers D (2010) De-escalation after empirical meropenem treatment in the intensive care unit: fiction or reality? J Crit Care 25:641–646

Gonzalez L, Cravoisy A, Barraud D, Conrad M, Nace L, Lemarie J, Bollaert PE, Gibot S (2013) Factors influencing the implementation of antibiotic de-escalation and impact of this strategy in critically ill patients. Crit Care 17:R140

Mokart D, Slehofer G, Lambert J, Sannini A, Chow-Chine L, Brun JP, Berger P, Duran S, Faucher M, Blache JL, Saillard C, Vey N, Leone M (2014) De-escalation of antimicrobial treatment in neutropenic patients with severe sepsis: results from an observational study. Intensive Care Med 40:41–49

De Waele JJ, Bassetti M, Martin-Loeches I (2014) Impact of de-escalation on ICU patients’ prognosis. Intensive Care Med 40:1583–1585

Leone M, Bechis C, Baumstarck K, Lefrant JY, Albanese J, Jaber S, Lepape A, Constantin JM, Papazian L, Bruder N, Allaouchiche B, Bezulier K, Antonini F, Textoris J, Martin C (2014) De-escalation versus continuation of empirical antimicrobial treatment in severe sepsis: a multicenter non-blinded randomized noninferiority trial. Intensive Care Med 40:1399–1408

Kollef MH (2014) What can be expected from antimicrobial de-escalation in the critically ill? Intensive Care Med 40:92–95

Thabit AK, Crandon JL, Nicolau DP (2015) Antimicrobial resistance: impact on clinical and economic outcomes and the need for new antimicrobials. Expert Opin Pharmacother 16:159–177

Roberts JA, Abdul-Aziz MH, Lipman J, Mouton JW, Vinks AA, Felton TW, Hope WW, Farkas A, Neely MN, Schentag JJ, Drusano G, Frey OR, Theuretzbacher U, Kuti JL, International Society of Anti-Infective Pharmacology and the Pharmacokinetics and Pharmacodynamics Study Group of the European Society of Clinical Microbiology and Infectious Diseases (2014) Individualised antibiotic dosing for patients who are critically ill: challenges and potential solutions. Lancet Infect Dis 14:498–509

Udy AA, Baptista JP, Lim NL, Joynt GM, Jarrett P, Wockner L, Boots RJ, Lipman J (2014) Augmented renal clearance in the ICU: results of a multicenter observational study of renal function in critically ill patients with normal plasma creatinine concentrations. Crit Care Med 42:520–527

Roberts JA, Paul SK, Akova M, Bassetti M, De Waele JJ, Dimopoulos G, Kaukonen KM, Koulenti D, Martin C, Montravers P, Rello J, Rhodes A, Starr T, Wallis SC, Lipman J, DALI Study (2014) DALI: defining antibiotic levels in intensive care unit patients: are current β-lactam antibiotic doses sufficient for critically ill patients? Clin Infect Dis 58:1072–1083

Nicasio AM, Eagye KJ, Nicolau DP, Shore E, Palter M, Pepe J, Kuti JL (2010) A pharmacodynamic-based clinical pathway for empiric antibiotic choice in patients infected with ventilator-associated pneumonia. J Crit Care 25:69–77

MacVane SH, Kuti JL, Nicolau DP (2014) Prolonging β-lactam infusion: a review of the rationale and evidence, and guidance for implementation. Int J Antimicrob Agents 43(2):105–113

Eggimann P, Pittet D (2001) Infection control in the ICU. Chest 120:2059–2093

Siegel JD, Rhinehart E, Jackson M, Chiarello L, Health Care Infection Control Practices Advisory Committee (2007) 2007 guideline for isolation precautions: preventing transmission of infectious agents in health care settings. Am J Infect Control 35:S65–S164

Price JR, Golubchik T, Cole K, Wilson DJ, Crook DW, Thwaites GE, Bowden R, Walker AS, Peto TE, Paul J, Llewelyn MJ (2014) Whole-genome sequencing shows that patient-to-patient transmission rarely accounts for acquisition of Staphylococcus aureus in an intensive care unit. Clin Infect Dis 58(5):609–618

Longtin Y, Sax H, Allegranzi B, Schneider F, Pittet D, Videos in clinical medicine (2011) Hand hygiene. N Engl J Med 364:e24

Derde LP, Cooper BS, Goossens H, Malhotra-Kumar S, Willems RJ, Gniadkowski M, Hryniewicz W, Empel J, Dautzenberg MJ, Annane D, Aragão I, Chalfine A, Dumpis U, Esteves F, Giamarellou H, Muzlovic I, Nardi G, Petrikkos GL, Tomic V, Martí AT, Stammet P, Brun-Buisson C, Bonten MJ, MOSAR WP3 Study Team (2014) Interventions to reduce colonisation and transmission of antimicrobial-resistant bacteria in intensive care units: an interrupted time series study and cluster randomised trial. Lancet Infect Dis 14:31–39

Daneman N, Sarwar S, Fowler RA, Cuthbertson BH, SuDDICU Canadian Study Group (2013) Effect of selective decontamination on antimicrobial resistance in intensive care units: a systematic review and meta-analysis. Lancet Infect Dis 13(4):328–341

Bassetti M, Righi E (2014) SDD and colistin resistance: end of a dream? Intensive Care Med 40(7):1066–1067

Spellberg B, Guidos R, Gilbert D, Bradley J, Boucher HW, Scheld WM, Bartlett JG, Edwards J Jr, Infectious Diseases Society of America (2008) The epidemic of antibiotic-resistant infections: a call to action for the medical community from the Infectious Diseases Society of America. Clin Infect Dis 46:155–164

Piddock LJ (2012) The crisis of no new antibiotics—what is the way forward? Lancet Infect Dis 12:249–253

Infectious Diseases Society of America (2010) The 10 × '20 initiative: pursuing a global commitment to develop 10 new antibacterial drugs by 2020. Clin Infect Dis 50:1081–1083

The Pew Charitable Trusts (2014) Tracking the pipeline of antibiotics in development. http://www.pewtrusts.org/en/research-and-analysis/issue-briefs/2014/03/12/tracking-the-pipeline-of-antibiotics-in-development. Accessed September 2014

Poulakou G, Bassetti M, Righi E, Dimopoulos G (2014) Current and future treatment options for infections caused by multidrug-resistant Gram-negative pathogens. Future Microbiol 9:1053–1069

Luyt CE, Clavel M, Guntupalli K (2009) Pharmacokinetics and lung delivery of PDDS-aerosolized amikacin (NKTR-061) in intubated and mechanically ventilated patients with nosocomial pneumonia. Crit Care 13(6):R200

Zahar JR, Lortholary O, Martin C, Potel G, Plesiat P, Nordmann P (2009) Addressing the challenge of extended-spectrum beta-lactamases. Curr Opin Investig Drugs 10:172–180

Rodríguez-Baño J, Picón E, Navarro MD, López-Cerero L, Pascual A, ESBL-REIPI Group (2012) Impact of changes in CLSI and EUCAST breakpoints for susceptibility in bloodstream infections due to extended-spectrum β-lactamase-producing Escherichia coli. Clin Microbiol Infect 18:894–900

Shiber S, Yahav D, Avni T, Leibovici L, Paul M (2015) β-Lactam/β-lactamase inhibitors versus carbapenems for the treatment of sepsis: systematic review and meta-analysis. J Antimicrob Chemother 70:41–47

Rodríguez-Baño J, Picón E, Gijón P, Hernández JR, Ruíz M, Peña C, Almela M, Almirante B, Grill F, Colomina J, Giménez M, Oliver A, Horcajada JP, Navarro G, Coloma A, Pascual A, Spanish Network for Research in Infectious Diseases (REIPI) (2010) Community-onset bacteremia due to extended-spectrum beta-lactamase-producing Escherichia coli: risk factors and prognosis. Clin Infect Dis 50:40–48

Vardakas KZ, Tansarli GS, Rafailidis PI, Falagas ME (2012) Carbapenems versus alternative antibiotics for the treatment of bacteraemia due to Enterobacteriaceae producing extended-spectrum β-lactamases: a systematic review and meta-analysis of randomized controlled trials. J Antimicrob Chemother 67:2793–2803

Lee NY, Lee CC, Huang WH, Tsui KC, Hsueh PR, Ko WC (2013) Cefepime therapy for monomicrobial bacteremia caused by cefepime-susceptible extended-spectrum beta-lactamase-producing Enterobacteriaceae: MIC matters. Clin Infect Dis 56:488–495

Goethaert K, Van Looveren M, Lammens C, Jansens H, Baraniak A, Gniadkowski M, Van Herck K, Jorens PG, Demey HE, Ieven M, Bossaert L, Goossens H (2006) High-dose cefepime as an alternative treatment for infections caused by TEM-24 ESBL-producing Enterobacter aerogenes in severely-ill patients. Clin Microbiol Infect 12:56–62

Chopra T, Marchaim D, Veltman J, Johnson P, Zhao JJ, Tansek R, Hatahet D, Chaudhry K, Pogue JM, Rahbar H, Chen TY, Truong T, Rodriguez V, Ellsworth J, Bernabela L, Bhargava A, Yousuf A, Alangaden G, Kaye KS (2012) Impact of cefepime therapy on mortality among patients with bloodstream infections caused by extended-spectrum-β-lactamase-producing Klebsiella pneumoniae and Escherichia coli. Antimicrob Agents Chemother 56:3936–3942

Munoz-Price LS, Poirel L, Bonomo RA, Schwaber MJ, Daikos GL, Cormican M, Cornaglia G, Garau J, Gniadkowski M, Hayden MK, Kumarasamy K, Livermore DM, Maya JJ, Nordmann P, Patel JB, Paterson DL, Pitout J, Villegas MV, Wang H, Woodford N, Quinn JP (2013) Clinical epidemiology of the global expansion of Klebsiella pneumoniae carbapenemases. Lancet Infect Dis 13:785–796

Tumbarello M, Trecarichi EM, Tumietto F, Del Bono V, De Rosa FG, Bassetti M, Losito AR, Tedeschi S, Saffioti C, Corcione S, Giannella M, Raffaelli F, Pagani N, Bartoletti M, Spanu T, Marchese A, Cauda R, Viscoli C, Viale P (2014) Predictive models for identification of hospitalized patients harboring KPC-producing Klebsiella pneumoniae. Antimicrob Agents Chemother 58:3514–3520

Tzouvelekis LS, Markogiannakis A, Piperaki E, Souli M, Daikos GL (2014) Treating infections caused by carbapenemase-producing Enterobacteriaceae. Clin Microbiol Infect 20:862–872

Daikos GL, Tsaousi S, Tzouvelekis LS, Anyfantis I, Psichogiou M, Argyropoulou A, Stefanou I, Sypsa V, Miriagou V, Nepka M, Georgiadou S, Markogiannakis A, Goukos D, Skoutelis A (2014) Carbapenemase-producing Klebsiella pneumoniae bloodstream infections: lowering mortality by antibiotic combination schemes and the role of carbapenems. Antimicrob Agents Chemother 58:2322–2328

Dubrovskaya Y, Chen TY, Scipione MR, Esaian D, Phillips MS, Papadopoulos J, Mehta SA (2013) Risk factors for treatment failure of polymyxin B monotherapy for carbapenem-resistant Klebsiella pneumoniae infections. Antimicrob Agents Chemother 57:5394–5397

Pontikis K, Karaiskos I, Bastani S, Dimopoulos G, Kalogirou M, Katsiari M, Oikonomou A, Poulakou G, Roilides E, Giamarellou H (2014) Outcomes of critically ill intensive care unit patients treated with fosfomycin for infections due to pandrug-resistant and extensively drug-resistant carbapenemase-producing Gram-negative bacteria. Int J Antimicrob Agents 43:52–59

Plachouras D, Karvanen M, Friberg LE, Papadomichelakis E, Antoniadou A, Tsangaris I, Karaiskos I, Poulakou G, Kontopidou F, Armaganidis A, Cars O, Giamarellou H (2009) Population pharmacokinetic analysis of colistin methanesulfonate and colistin after intravenous administration in critically ill patients with infections caused by gram-negative bacteria. Antimicrob Agents Chemother 53:3430–3436

Garonzik SM, Li J, Thamlikitkul V, Paterson DL, Shoham S, Jacob J, Silveira FP, Forrest A, Nation RL (2011) Population pharmacokinetics of colistin methanesulfonate and formed colistin in critically ill patients from a multicenter study provide dosing suggestions for various categories of patients. Antimicrob Agents Chemother 55:3284–3294

Dalfino L, Puntillo F, Mosca A, Monno R, Spada ML, Coppolecchia S, Miragliotta G, Bruno F, Brienza N (2012) High-dose, extended-interval colistin administration in critically ill patients: is this the right dosing strategy? A preliminary study. Clin Infect Dis 54:1720–1726

Ramirez J, Dartois N, Gandjini H, Yan JL, Korth-Bradley J, McGovern PC (2013) Randomized phase 2 trial to evaluate the clinical efficacy of two high-dosage tigecycline regimens versus imipenem–cilastatin for treatment of hospital-acquired pneumonia. Antimicrob Agents Chemother 57:1756–1762

Giamarellou H, Galani L, Baziaka F, Karaiskos I (2013) Effectiveness of a double-carbapenem regimen for infections in humans due to carbapenemase-producing pandrug-resistant Klebsiella pneumoniae. Antimicrob Agents Chemother 57:2388–2390

Giannella M, Trecarichi EM, De Rosa FG, Del Bono V, Bassetti M, Lewis RE, Losito AR, Corcione S, Saffioti C, Bartoletti M, Maiuro G, Cardellino CS, Tedeschi S, Cauda R, Viscoli C, Viale P, Tumbarello M (2014) Risk factors for carbapenem-resistant Klebsiella pneumoniae bloodstream infection among rectal carriers: a prospective observational multicentre study. Clin Microbiol Infect 20:1357–1362

Tumbarello M, De Pascale G, Trecarichi EM, Spanu T, Antonicelli F, Maviglia R, Pennisi MA, Bello G, Antonelli M (2013) Clinical outcomes of Pseudomonas aeruginosa pneumonia in intensive care unit patients. Intensive Care Med 39:682–692

Eagye KJ, Banevicius MA, Nicolau DP (2012) Pseudomonas aeruginosa is not just in the intensive care unit any more: implications for empirical therapy. Crit Care Med 40:1329–1332

Bliziotis IA, Petrosillo N, Michalopoulos A, Samonis G, Falagas ME (2011) Impact of definite therapy with beta-lactam monotherapy or combination with an aminoglycoside or a quinolone for Pseudomonas aeruginosa bacteremia. PLoS One 6:e2640

Zhanel GG, Chung P, Adam H, Zelenitsky S, Denisuik A, Schweizer F, Lagacé-Wiens PR, Rubinstein E, Gin AS, Walkty A, Hoban DJ, Lynch JP 3rd, Karlowsky JA (2014) Ceftolozane/tazobactam: a novel cephalosporin/β-lactamase inhibitor combination with activity against multidrug-resistant gram-negative bacilli. Drugs 74:31–51

Karagoz G, Kadanali A, Dede B, Sahin OT, Comoglu S, Altug SB, Naderi S (2014) Extensively drug-resistant Pseudomonas aeruginosa ventriculitis and meningitis treated with intrathecal colistin. Int J Antimicrob Agents 43(1):93–94

Garnacho-Montero J, Ortiz-Leyba C, Fernández-Hinojosa E, Aldabó-Pallás T, Cayuela A, Marquez-Vácaro JA, Garcia-Curiel A, Jiménez-Jiménez FJ (2005) Acinetobacter baumannii ventilator-associated pneumonia: epidemiological and clinical findings. Intensive Care Med 31:649–655

Martin-Loeches I, Deja M, Koulenti D, Dimopoulos G, Marsh B, Torres A, Niederman MS, Rello J, EU-VAP Study Investigators (2013) Potentially resistant microorganisms in intubated patients with hospital-acquired pneumonia: the interaction of ecology, shock and risk factors. Intensive Care Med 39(4):672–681

Dijkshoorn L, Nemec A, Seifert H (2007) An increasing threat in hospitals: multidrug-resistant Acinetobacter baumannii. Nat Rev Microbiol 5:939–951

Gales AC, Jones RN, Sader HS (2011) Contemporary activity of colistin and polymyxin B against a worldwide collection of Gram-negative pathogens: results from the SENTRY Antimicrobial Surveillance Program (2006–09). J Antimicrob Chemother 66:2070–2074

Jones RN, Flonta M, Gurler N, Cepparulo M, Mendes RE, Castanheira M (2014) Resistance surveillance program report for selected European nations. Diagn Microbiol Infect Dis 78:429–436

Sandri AM, Landersdorfer CB, Jacob J, Boniatti MM, Dalarosa MG, Falci DR, Behle TF, Bordinhão RC, Wang J, Forrest A, Nation RL, Li J, Zavascki AP (2013) Population pharmacokinetics of intravenous polymyxin B in critically ill patients: implications for selection of dosage regimens. Clin Infect Dis 57:524–531

Cai Y, Wang R, Liang B, Bai N, Liu Y (2011) Systematic review and meta-analysis of the effectiveness and safety of tigecycline for treatment of infectious disease. Antimicrob Agents Chemother 55:1162–1172

Prasad P, Sun J, Danner RL, Natanson C (2012) Excess deaths associated with tigecycline after approval based on noninferiority trials. Clin Infect Dis 54:1699–1709

Medicines and Healthcare Products Regulatory Agency (2011) Tigecycline: increased mortality in clinical trials—use only when other antibiotics are unsuitable. http://www.mhra.gov.uk/Safetyinformation/DrugSafetyUpdate/CON111761. Accessed 23 Dec 2014

De Pascale G, Montini L, Pennisi M, Bernini V, Maviglia R, Bello G, Spanu T, Tumbarello M, Antonelli M (2014) High dose tigecycline in critically ill patients with severe infections due to multidrug-resistant bacteria. Crit Care 18:R90

Durante-Mangoni E, Signoriello G, Andini R, Mattei A, De Cristoforo M, Murino P, Bassetti M, Malacarne P, Petrosillo N, Galdieri N, Mocavero P, Corcione A, Viscoli C, Zarrilli R, Gallo C, Utili R (2013) Colistin and rifampicin compared with colistin alone for the treatment of serious infections due to extensively drug-resistant Acinetobacter baumannii: a multicenter, randomized clinical trial. Clin Infect Dis 57:349–358

López-Cortés LE, Cisneros JM, Fernández-Cuenca F, Bou G, Tomás M, Garnacho-Montero J, Pascual A, Martínez-Martínez L, Vila J, Pachón J, Rodríguez-Baño J, GEIH/REIPI-Ab2010 Group (2014) Monotherapy versus combination therapy for sepsis due to multidrug-resistant Acinetobacter baumannii: analysis of a multicentre prospective cohort. J Antimicrob Chemother 69:3119–3126

Petrosillo N, Giannella M, Antonelli M, Antonini M, Barsic B, Belancic L, Inkaya AC, De Pascale G, Grilli E, Tumbarello M, Akova M (2014) Clinical experience of colistin-glycopeptide combination in critically ill patients infected with Gram-negative bacteria. Antimicrob Agents Chemother 58:851–858

National Institute for Health and Care Excellence (2014) Hospital-acquired pneumonia caused by methicillin-resistant Staphylococcus aureus: telavancin. http://www.nice.org.uk/advice/esnm44. Accessed 23 December 2014

Bally M, Dendukuri N, Sinclair A, Ahern SP, Poisson M, Brophy J (2012) A network meta-analysis of antibiotics for treatment of hospitalised patients with suspected or proven meticillin-resistant Staphylococcus aureus infection. Int J Antimicrob Agents 40:479–495

Gurusamy KS, Koti R, Toon CD, Wilson P, Davidson BR (2013) Antibiotic therapy for the treatment of methicillin-resistant Staphylococcus aureus (MRSA) infections in surgical wounds. Cochrane Database Syst Rev 8:CD009726

Gurusamy KS, Koti R, Toon CD, Wilson P, Davidson BR (2013) Antibiotic therapy for the treatment of methicillin-resistant Staphylococcus aureus (MRSA) in non-surgical wounds. Cochrane Database Syst Rev 11:CD010427

Logman JF, Stephens J, Heeg B, Haider S, Cappelleri J, Nathwani D, Tice A, van Hout BA (2010) Comparative effectiveness of antibiotics for the treatment of MRSA complicated skin and soft tissue infections. Curr Med Res Opin 26:1565–1578

Wunderink RG, Niederman MS, Kollef MH, Shorr AF, Kunkel MJ, Baruch A, McGee WT, Reisman A, Chastre J (2012) Linezolid in methicillin-resistant Staphylococcus aureus nosocomial pneumonia: a randomized, controlled study. Clin Infect Dis 54(5):621–629

Kalil AC, Van Schooneveld TC, Fey PD, Rupp ME (2014) Association between vancomycin minimum inhibitory concentration and mortality among patients with Staphylococcus aureus bloodstream infections: a systematic review and meta-analysis. JAMA 312:1552–1564

Sánchez García M, De la Torre MA, Morales G, Peláez B, Tolón MJ, Domingo S, Candel FJ, Andrade R, Arribi A, García N, Martínez Sagasti F, Fereres J, Picazo J (2010) Clinical outbreak of linezolid-resistant Staphylococcus aureus in an intensive care unit. JAMA 303(22):2260–2264

van Hal SJ, Paterson DL, Lodise TP (2013) Systematic review and meta-analysis of vancomycin-induced nephrotoxicity associated with dosing schedules that maintain troughs between 15 and 20 milligrams per liter. Antimicrob Agents Chemother 57:734–744

Public Health England (2013) Updated guidance on the management and treatment of C. difficile infection. https://www.gov.uk/government/publications/clostridium-difficile-infection-guidance-on-management-and-treatment. Accessed 23 December 2014

Wilcox MH (2014) The trials and tribulations of treating Clostridium difficile infection-one step backward, one step forward, but still progress. Clin Infect Dis 59:355–357

Britt NS, Steed ME, Potter EM, Clough LA (2014) Tigecycline for the treatment of severe and severe complicated Clostridium difficile infection. Infect Dis Ther 3:321–331

Herpers BL, Vlaminckx B, Burkhardt O et al (2009) Intravenous tigecycline as adjunctive or alternative therapy for severe refractory Clostridium difficile infection. Clin Infect Dis 48(12):1732–1735